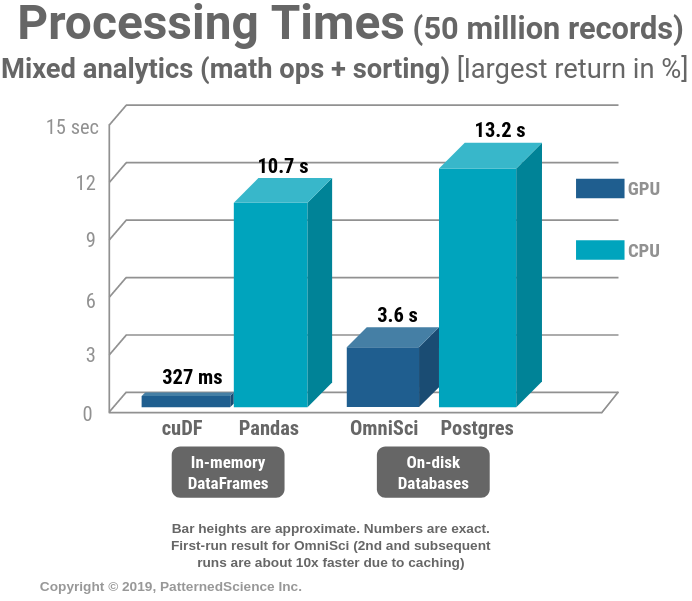

Talk/Demo: Supercharging Analytics with GPUs: OmniSci/cuDF vs Postgres/Pandas/PDAL | Masood Khosroshahy (Krohy), Senior Solution Architect (AI & Big Data)

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog

Python Pandas Tutorial – Beginner's Guide to GPU Accelerated DataFrames for Pandas Users | NVIDIA Technical Blog

An Introduction to GPU DataFrames for Pandas Users - Data Science of the Day - NVIDIA Developer Forums